What is the article about?

The article outlines the basic features of OTTO’s Dynamic Pricing service, which currently sets the prices for the majority of products on otto.de. It describes how we built the service as a robust and scalable cloud application able to deliver prices for millions of articles on a daily basis. It also explains how we benefited from developing the application in a cross-functional team and how we intend to keep improving the service.

Using AI to work out the best prices for products

Let's imagine it’s early June. We have 230 pieces of a nice floral summer dress left in stock. All of them need to be sold by the end of July because we'll require that storage capacity for the upcoming Autumn-Winter assortment. So the question now is, what price will ensure that we neither run out of stock too early nor have unsold stock left at the end of July? Markdown Pricing services address precisely this question.

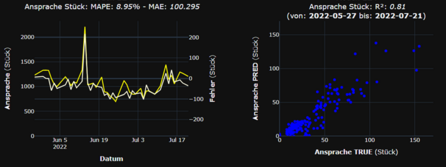

We use Machine Learning models such as OLS Regression, XGBoost and LightGBM to forecast how many articles can be sold within the remaining time span at different prices. We train these models on historical data on prices and order volumes. Other factors, such as competitor prices or marketing campaigns, can also strongly influence order volume, so we feed this information into the models as well. Including these features improves our demand predictions. It also lets us isolate the changes in demand that are caused by price changes alone. With a precise prediction model at hand, determining the optimal price for the leftover summer dresses is straightforward.
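To make the markdown question concrete, here is a minimal sketch in Python. It does not use a trained XGBoost or LightGBM forecaster. Instead it assumes a simple constant-elasticity demand curve. All of the following are hypothetical illustration values, not OTTO's figures:

- `forecast_units` and `markdown_price` (function names),
- a 61-day horizon (1 June to 31 July),
- a baseline of 2 units/day at the reference price,
- an elasticity of -2.5,
- the candidate price list.

The sketch picks the highest candidate price whose forecast sales over the remaining days still clear the stock.

```python
from typing import Optional, Sequence


def forecast_units(price: float, days: int, ref_price: float,
                   base_daily_units: float, elasticity: float) -> float:
    """Hypothetical stand-in for a trained demand model (not OTTO's model).

    Constant-elasticity curve: daily demand is base_daily_units at ref_price
    and scales with (price / ref_price) ** elasticity. A negative elasticity
    means demand rises as the price falls.
    """
    daily_units = base_daily_units * (price / ref_price) ** elasticity
    return daily_units * days


def markdown_price(stock: int, days: int, candidates: Sequence[float],
                   ref_price: float, base_daily_units: float,
                   elasticity: float) -> Optional[float]:
    """Return the highest candidate price whose forecast sales clear the stock.

    With demand falling as the price rises, every higher candidate was
    already rejected because it would leave unsold units at the deadline;
    lower candidates would give away margin and tend to sell out early.
    Returns None if no candidate clears the stock.
    """
    for price in sorted(candidates, reverse=True):
        if forecast_units(price, days, ref_price, base_daily_units, elasticity) >= stock:
            return price
    return None


# 230 dresses, 1 June to 31 July (61 days), hypothetical demand parameters.
best = markdown_price(stock=230, days=61,
                      candidates=[49.99, 44.99, 39.99, 34.99, 29.99],
                      ref_price=49.99, base_daily_units=2.0, elasticity=-2.5)
print(best)  # 34.99
```

At 39.99 this toy curve forecasts only about 213 units, so the dress would not sell out. At 34.99 it forecasts about 298 units, enough to clear the 230 in stock. A production model replaces `forecast_units` and keeps the same search logic.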

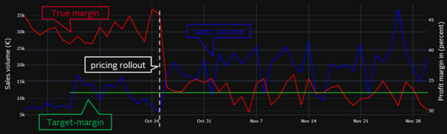

Besides Markdown Pricing, there is a second application for Dynamic Pricing: Portfolio Optimization. Let’s say we're selling 200 different types of refrigerators. To ensure profitability, the overall margin across these refrigerators must be at least 15 percent. If it falls below that value, there won't be enough money left to cover OTTO’s costs. Our goal is therefore to maximize sales volume without letting the margin fall below this critical value.
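The 15 percent rule can be expressed as a check on the revenue-weighted margin of the whole assortment. Below is a minimal sketch. It assumes "overall margin" means total profit divided by total revenue across all articles. The `Article` fields and the example figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Article:
    price: float      # selling price per unit
    unit_cost: float  # purchase cost per unit
    units: float      # (forecast) units sold


def overall_margin(articles: Iterable[Article]) -> float:
    """Revenue-weighted margin of an assortment: total profit / total revenue."""
    articles = list(articles)
    revenue = sum(a.price * a.units for a in articles)
    if revenue == 0:
        raise ValueError("assortment has no revenue")
    profit = sum((a.price - a.unit_cost) * a.units for a in articles)
    return profit / revenue


def meets_margin_target(articles: Iterable[Article], target: float = 0.15) -> bool:
    """True if the assortment's overall margin is at least the target (15 % by default)."""
    return overall_margin(articles) >= target


fridges = [Article(price=500.0, unit_cost=400.0, units=10),
           Article(price=800.0, unit_cost=720.0, units=5)]
print(round(overall_margin(fridges), 4))  # 0.1556
print(meets_margin_target(fridges))       # True
```

Because the margin is weighted by revenue, a deep price cut on a high-volume article moves the overall margin more than the same cut on a slow seller.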

Again, the demand prediction models come in handy: they help us distinguish between articles that react strongly to price changes (elastic articles) and those that do not (inelastic articles). For elastic articles, a price reduction triggers a considerable rise in demand. Lowering their prices therefore maximizes overall sales volume and gives our customers attractive prices. Inelastic articles, on the other hand, are better left alone. Cutting a price without increasing the order volume simply loses money – something no retailer appreciates.
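One common way to separate elastic from inelastic articles is to estimate a price elasticity per article. The sketch below swaps in the simplest textbook approach instead of the XGBoost/LightGBM models mentioned above: the OLS slope of log(units) on log(price). It uses the conventional threshold |elasticity| > 1. Both choices are our assumptions, not a description of OTTO's models. The sketch also ignores the competitor-price and campaign features, which the models described above do account for.

```python
import math
from statistics import fmean
from typing import Sequence


def price_elasticity(prices: Sequence[float], units: Sequence[float]) -> float:
    """OLS slope of log(units) on log(price): a constant-elasticity estimate.

    Requires at least two distinct prices and strictly positive values.
    """
    xs = [math.log(p) for p in prices]
    ys = [math.log(u) for u in units]
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


def is_elastic(elasticity: float, threshold: float = 1.0) -> bool:
    """Elastic if a 1 % price change moves demand by more than 1 %."""
    return abs(elasticity) > threshold


prices = [10.0, 12.0, 15.0, 20.0]
units = [1000 * p ** -2 for p in prices]  # synthetic data with elasticity -2
e = price_elasticity(prices, units)
print(round(e, 6), is_elastic(e))  # -2.0 True
```

In the log-log form, the regression slope is the elasticity itself, which makes the elastic/inelastic decision a simple comparison.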

Figure 2 shows Portfolio Optimization at work. After the rollout, prices were reduced to maximize the sales volume. As a result, the assortment’s overall margin dropped, but only to the target level and, on average, not below it. Fine! Our customers are happy because they benefit from more attractive prices on otto.de. OTTO is happy because the sales volume grows considerably while the margin stays at or above the critical threshold. Unfortunately, our happiness-spreading Portfolio Optimization is computationally very expensive. The next section discusses the technical challenges this creates.
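The trade-off in Figure 2 can be imitated with a deliberately simple greedy heuristic. It repeatedly cuts the price of the elastic article whose cut adds the most units, as long as the overall margin stays at or above the target. This is an illustrative stand-in, not OTTO's optimizer. The following are all hypothetical:

- the constant-elasticity demand curve,
- the 5 % price step,
- the below-cost floor,
- the function and field names.

The sketch also hints at why the real problem is expensive. Every candidate move requires re-forecasting demand and re-evaluating the margin of the whole assortment.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Item:
    ref_price: float   # price at which base_units was forecast
    unit_cost: float   # purchase cost per unit
    base_units: float  # forecast units at ref_price
    elasticity: float  # negative: demand rises as the price falls
    price: float       # currently proposed price

    def units(self) -> float:
        # Hypothetical constant-elasticity demand, standing in for the ML forecast.
        return self.base_units * (self.price / self.ref_price) ** self.elasticity


def margin(items: List[Item]) -> float:
    """Overall margin of the assortment: total profit / total revenue."""
    revenue = sum(i.price * i.units() for i in items)
    profit = sum((i.price - i.unit_cost) * i.units() for i in items)
    return profit / revenue


def total_units(items: List[Item]) -> float:
    return sum(i.units() for i in items)


def greedy_portfolio(items: List[Item], target: float = 0.15,
                     step: float = 0.05) -> List[Item]:
    """Repeatedly apply the single price cut that adds the most units while the
    overall margin stays >= target. Items with elasticity >= -1 are never cut."""
    items = list(items)
    while True:
        best_gain, best_idx, best_cut = 0.0, None, None
        for idx, item in enumerate(items):
            if item.elasticity >= -1.0:
                continue  # inelastic: a cut would mostly lose money
            cut = replace(item, price=round(item.price * (1 - step), 2))
            if cut.price <= cut.unit_cost:
                continue  # never price below cost in this sketch
            trial = items[:idx] + [cut] + items[idx + 1:]
            if margin(trial) < target:
                continue  # this cut would break the margin constraint
            gain = cut.units() - item.units()
            if gain > best_gain:
                best_gain, best_idx, best_cut = gain, idx, cut
        if best_idx is None:
            return items  # no admissible cut left
        items[best_idx] = best_cut


items = [Item(ref_price=100.0, unit_cost=70.0, base_units=100.0, elasticity=-3.0, price=100.0),
         Item(ref_price=100.0, unit_cost=60.0, base_units=100.0, elasticity=-0.5, price=100.0)]
result = greedy_portfolio(items, target=0.30)
print([i.price for i in result])  # [90.25, 100.0]
```

The elastic item is cut twice. A third cut would push the margin below 30 percent, so the search stops. The inelastic item keeps its price, mirroring the rule described above.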

Building a robust and scalable pipeline

In 2019 our Markdown Pricing and Portfolio Optimization services covered three assortments, delivering roughly 200,000 prices per week. At the time, OTTO was running one of the largest Hadoop clusters in Europe. Nevertheless, the machines were creaking under the strain of price optimization. During the 'cluster happy hours', our service shared the machine pool with lots of other performance-hungry applications, and the available worker nodes were chewed up in no time. A typical log from those days read: “Application started... Optimization nearly complete... Sorry, you have been preempted... Restarting application”. In other words, because we relied on shared resources, our pipeline was neither robust nor scalable.

We were delivering prices for only three assortments, with ten more waiting to be onboarded. That left us two options: move the computationally expensive part of our application to the cloud, or try to scare our colleagues away from the shared resources. As a small team of rather weakish developers, neither willing nor able to scare anybody off, we chose the first option. With initial support from a team of experienced cloud developers, we started coding our cloud infrastructure in Terraform. About six months later, the entire cloud pipeline was ready to go live.

We were still gathering our input data from on-premise systems, but the computational heavy lifting now took place in the cloud. We use Dataproc to train our machine learning models and to run the price optimization in a distributed way. Up to 2,500 cores at a time are busy teaching ML models how to predict sales from prices, or working out the best prices for roughly one million articles every day. Apart from model training and price optimization, all core components of our application are built as containerized Docker microservices (e.g., services that write the pricing results to databases or provide price-upload data for otto.de). A Jenkins server orchestrates ETL processes, model training, price optimization, price upload and all other microservices. Jenkins itself runs in a Docker container on a cloud VM. So our application essentially consists of a dockerized Jenkins running dockerized microservices, with Dataproc jobs for model training and price optimization in between.
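In our setup, Jenkins handles the orchestration. To illustrate the dependency structure it has to respect, here is a minimal Python sketch using the standard library's `graphlib` (Python 3.9+). The stage names and their dependencies are hypothetical labels for the steps named above. The sketch only computes which stages could run together; it does not launch Docker containers or Dataproc jobs.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Hypothetical stage names; each stage maps to the stages that must finish first.
PIPELINE: Dict[str, Set[str]] = {
    "etl": set(),                              # gather input data from on-premise systems
    "model_training": {"etl"},                 # Dataproc job
    "price_optimization": {"model_training"},  # Dataproc job
    "store_results": {"price_optimization"},   # containerized microservice
    "price_upload": {"price_optimization"},    # containerized microservice
}


def execution_waves(stages: Dict[str, Set[str]]) -> List[List[str]]:
    """Group stages into waves. Each stage depends only on earlier waves,
    so stages within one wave could run in parallel."""
    sorter = TopologicalSorter(stages)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a loop
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for wave in execution_waves(PIPELINE):
    print(wave)
# ['etl']
# ['model_training']
# ['price_optimization']
# ['price_upload', 'store_results']
```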

Today, our service delivers as many as 4.7 million prices per week. That is roughly 24 times as many (4.7 million ÷ 200,000 ≈ 23.5) as we delivered when we hit the limits of our on-premise resources. And the service now runs smoothly. Well, not always. But fairly often. We keep working on it…

Putting it all together in a cross-functional setup

Ever heard a team’s Data Scientists wonder why “the ETL guys need a whole WEEK to add two tiny new columns to the mart”? Those Data Scientists have probably never walked in their colleagues' shoes. Otherwise, they would know that adding those columns can be a painful and lengthy procedure, depending on the quality and documentation of the source. In a cross-functional team setup, where the Data Scientists put on their work gloves and do the data wrangling themselves, this does not happen. Nor will ETL Engineers feel stranded when the pipeline breaks while the DevOps specialists are on holiday. They can fix it on their own or together with a Data Scientist.

We believe that a cross-functional setup strongly supports good and effective teamwork. Everybody in our team understands and values the work of the others, because we know the subtleties and challenges that come with each role. And everybody can, at least to some degree, stand in for everyone else. The Data Scientist is off sick? No worries, the DevOps engineer can build the prediction model. That takes the pressure off both of them.

Improving our product

We believe the best way to improve the performance of our Dynamic Pricing service is to constantly evaluate the team’s ideas for increasing the algorithm’s impact on business KPIs. We do this through experiments. Our application makes it easy to run different versions of an algorithm on two or more homogeneous subgroups of articles in the production environment on otto.de. The articles in group A receive prices calculated by algorithm X, while group B receives prices from algorithm Y. We can then compare KPIs such as sales or earnings between the groups and decide whether OTTO will benefit from a revised algorithm. If so, we put it into production… and start the next test. Test -> learn -> repeat. There are still plenty of hypotheses left to explore!
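A minimal sketch of such an experiment, under our own assumptions. Articles are grouped by a hypothetical stratum key (for example, their category) and randomly assigned to group A or B within each stratum. This is one simple way to obtain comparable groups. A per-article KPI is then compared with Welch's t-statistic. The function names, the stratum key and the choice of test are illustrative, not a description of OTTO's experimentation setup.

```python
import math
import random
from collections import defaultdict
from statistics import fmean, variance
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_split(article_ids: Sequence[str], stratum_of: Dict[str, Hashable],
                     seed: int = 42) -> Tuple[List[str], List[str]]:
    """Shuffle articles within each stratum and alternate them between
    group A and group B, so every stratum is split almost evenly."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for aid in article_ids:
        strata[stratum_of[aid]].append(aid)
    group_a: List[str] = []
    group_b: List[str] = []
    for key in sorted(strata, key=str):  # deterministic order for a fixed seed
        members = strata[key]
        rng.shuffle(members)
        group_a.extend(members[0::2])
        group_b.extend(members[1::2])
    return group_a, group_b


def welch_t(kpi_a: Sequence[float], kpi_b: Sequence[float]) -> float:
    """Welch's t-statistic for the difference in mean KPI (B minus A)."""
    se = math.sqrt(variance(kpi_a) / len(kpi_a) + variance(kpi_b) / len(kpi_b))
    return (fmean(kpi_b) - fmean(kpi_a)) / se


print(round(welch_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0]), 3))  # 3.674
```

To turn the statistic into a p-value, `scipy.stats.ttest_ind(kpi_a, kpi_b, equal_var=False)` runs the same Welch test.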

Similar Articles

How we used a simple trick to save USD 500,000 in data transfer costs (COST, November 12, 2024): Increased efficiency of cloud usage ✓ Cost reduction ✓ Migration to dual-stack IPv6 ✓

Developer Hacks – Modern Command Line Tools and Advanced Git Commands (Dominik, September 30, 2024): In this article, you learn more about a modern development setup, state-of-the-art alternatives to classic shell programs, and advanced git commands.